Do we want current web applications to run faster without spending more on better network connections or server infrastructure? Of course we do, and that was the main goal of the developers of the HTTP/2 protocol.

To be more precise, the goal was to make communication between the client (typically a web browser) and the server more efficient, while keeping maximum compatibility with existing applications:

HTTP/2 is intended to be as compatible as possible with current uses of HTTP. This means that, from the application perspective, the features of the protocol are largely unchanged.

The familiar structure of HTTP/1.1 was kept (we still have requests, responses, methods, headers and URIs), but the way messages are carried over the connection has been completely changed (optimised for communication speed).

The first weakness of HTTP/1.1 that stands out is the text format of its messages and the way the same headers (including cookies) are sent over and over again in requests and responses.

HTTP/2 introduces a binary message format and advanced header compression. The way the TCP transport protocol is used has also changed completely: communication is carried out over a single TCP connection, on which HTTP/2 frames are sent.

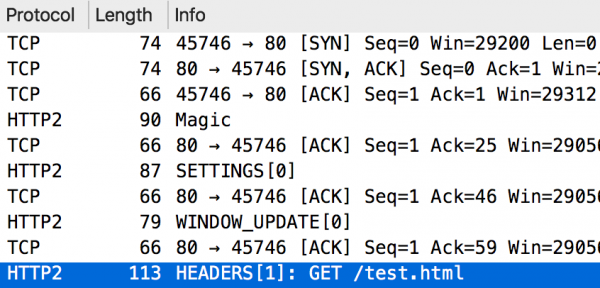

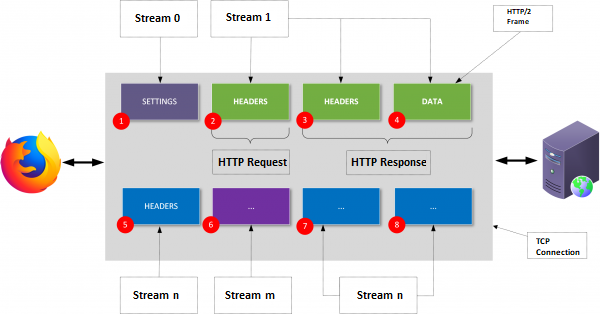

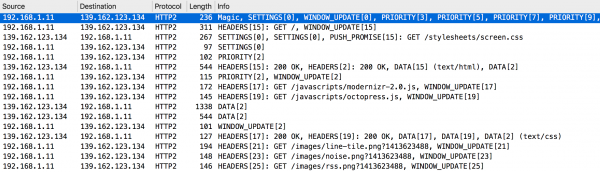

The frame is the relatively simple, atomic unit of the HTTP/2 protocol. Ten frame types are defined. For example, in Figure 6 we see frames of the types SETTINGS, WINDOW_UPDATE and HEADERS. It is in these frames that we find the HTTP structures known from HTTP/1.1 (the request line, the response status, headers, and the request or response body).
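Every frame starts with the same fixed 9-byte header (RFC 7540, section 4.1): a 24-bit payload length, an 8-bit type, 8 bits of flags, one reserved bit and a 31-bit stream identifier. The Python sketch below packs and unpacks that header and maps the codes of the ten frame types from section 6 of the RFC. The helper names are our own, and this is an illustration rather than a complete frame parser.

```python
import struct

# Frame type codes from RFC 7540, section 6.
FRAME_TYPES = {
    0x0: "DATA", 0x1: "HEADERS", 0x2: "PRIORITY", 0x3: "RST_STREAM",
    0x4: "SETTINGS", 0x5: "PUSH_PROMISE", 0x6: "PING", 0x7: "GOAWAY",
    0x8: "WINDOW_UPDATE", 0x9: "CONTINUATION",
}


def pack_frame_header(length: int, ftype: int, flags: int, stream_id: int) -> bytes:
    """Build the 9-byte header that precedes every HTTP/2 frame payload."""
    if not 0 <= length < 2**24:
        raise ValueError("payload length must fit in 24 bits")
    if not 0 <= stream_id < 2**31:
        raise ValueError("stream identifier must fit in 31 bits")
    # 24-bit length, then 8-bit type, 8-bit flags and 32 bits of R + stream id.
    return length.to_bytes(3, "big") + struct.pack(">BBI", ftype, flags, stream_id)


def unpack_frame_header(header: bytes) -> tuple[int, str, int, int]:
    """Split a 9-byte header into (length, type name, flags, stream id)."""
    if len(header) != 9:
        raise ValueError("an HTTP/2 frame header is exactly 9 bytes")
    length = int.from_bytes(header[:3], "big")
    ftype, flags, stream_id = struct.unpack(">BBI", header[3:])
    stream_id &= 0x7FFFFFFF  # ignore the reserved bit
    return length, FRAME_TYPES.get(ftype, f"UNKNOWN(0x{ftype:x})"), flags, stream_id
```

For example, `unpack_frame_header(pack_frame_header(46, 0x1, 0x25, 13))` returns `(46, 'HEADERS', 37, 13)`, which corresponds to the HEADERS frame sent by nghttp in the listing in the Tools section.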

Frames, in turn, are organised into two-way streams. For example, in Figure 6, the HEADERS frame is in stream no. 1.

Several frames in one stream give us the request-response exchange known from previous versions of HTTP (see section 8.1 of the RFC document quoted at the beginning of this text):

An HTTP request/response exchange fully consumes a single stream.
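Since one exchange consumes one stream, a client needs a fresh stream identifier for every request. Section 5.1.1 of RFC 7540 says that client-initiated streams use odd numbers, server-initiated ones (server push) use even numbers, stream 0 belongs to the connection itself, and identifiers must keep growing and can never be reused. A minimal Python sketch of a client-side allocator following those rules (the class name is hypothetical):

```python
class ClientStreamIds:
    """Hands out identifiers for client-initiated HTTP/2 streams."""

    MAX_STREAM_ID = 2**31 - 1  # stream identifiers are 31-bit unsigned integers

    def __init__(self) -> None:
        # Client streams are odd; stream 0 is reserved for the connection itself.
        self._next = 1

    def new_stream(self) -> int:
        if self._next > self.MAX_STREAM_ID:
            # Identifiers cannot be reused, so the only option is a new connection.
            raise RuntimeError("stream identifiers exhausted; open a new connection")
        stream_id = self._next
        self._next += 2  # each new stream must be numerically greater than the last
        return stream_id


ids = ClientStreamIds()
print([ids.new_stream() for _ in range(3)])  # [1, 3, 5]
```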

Comparison of communication with HTTP/1.1

Usage of the TCP protocol

Let’s start by looking at the usage of TCP by both versions of the HTTP protocol.

In both cases, persistent TCP connections are the default. The HTTP/1.1 specification (see https://tools.ietf.org/html/rfc7230) reads:

HTTP/1.1 defaults to the use of “persistent connections”, allowing multiple requests and responses to be carried over a single connection.

Is this behaviour mandatory in HTTP/1.1? No, as the RFC itself says:

A client that does not support persistent connections MUST send the “close” connection option with every request message.

With HTTP/1.1, the client opens one or more TCP connections to the server, and request-response pairs are exchanged over each of them. To send another HTTP request over a given TCP connection, we must wait for the previous response, unless we use so-called pipelining. This mechanism is described in section 6.3.2 of the previously mentioned RFC document (https://tools.ietf.org/html/rfc7230#section-6.3.2), where we read:

A client that supports persistent connections MAY “pipeline” its requests (i.e. send multiple requests without waiting for each response). A server (…) MUST send the corresponding responses in the same order as the requests were received.
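To make the idea concrete, here is a minimal Python sketch that writes several HTTP/1.1 requests to one TCP connection before reading anything back. The function names are our own. The last request carries `Connection: close`, so that the server closes the connection after the final response and reading can simply stop at end of stream. Whether a given server really handles the requests as a pipeline depends on that server.

```python
import socket


def build_pipelined_requests(host: str, paths: list[str]) -> bytes:
    """Concatenate several HTTP/1.1 GET requests into one buffer."""
    parts = []
    for i, path in enumerate(paths):
        # Only the last request asks the server to close the connection afterwards.
        close = "Connection: close\r\n" if i == len(paths) - 1 else ""
        parts.append(f"GET {path} HTTP/1.1\r\nHost: {host}\r\n{close}\r\n")
    return "".join(parts).encode("ascii")


def send_pipelined(host: str, port: int, paths: list[str], timeout: float = 5.0) -> bytes:
    """Send all requests at once, then read every response until the server closes."""
    payload = build_pipelined_requests(host, paths)
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(payload)  # no waiting for responses between requests
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # the server closed the connection after the last response
                break
            chunks.append(data)
    return b"".join(chunks)
```

Run against a server you are allowed to test, for example with `send_pipelined(host, 80, ["/test.html", "/test.html"])`, a server that supports pipelining should return two complete HTTP responses one after the other, as in Figure 2.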

In retrospect, we can say that HTTP/1.1 pipelining has not worked in practice. Modern web browsers do not support it at all. Instead, they open several parallel TCP connections to one server (in real conditions this turned out to be less error-prone and faster than classic pipelining). Of course, some restraint is needed here as well, so browsers open only a few such parallel connections. After all, each new TCP connection requires a 3-way handshake and additional resources to maintain it. With HTTPS, remember that establishing a TLS session is also costly.

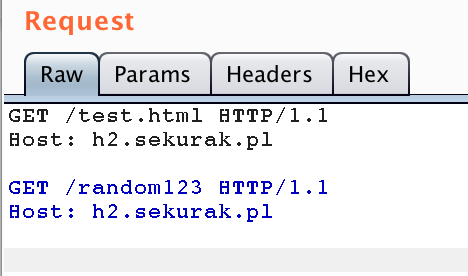

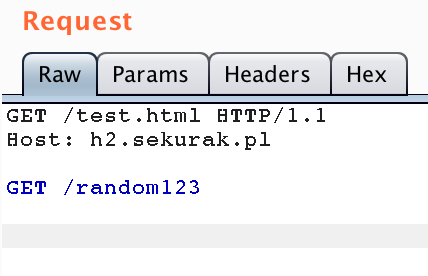

Does this mean that we can forget about pipelining and treat it as a historical curiosity? Definitely not. Many HTTP servers still support this mechanism, and it may have interesting security implications. Let’s look at an example of this type of communication in the Burp Suite tool (the content of the file test.html is test1):

Figure 2. An example of HTTP communication using pipelining. Two independent HTTP responses are visible.

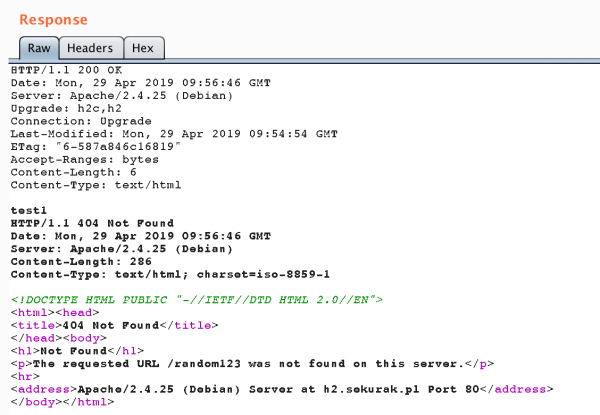

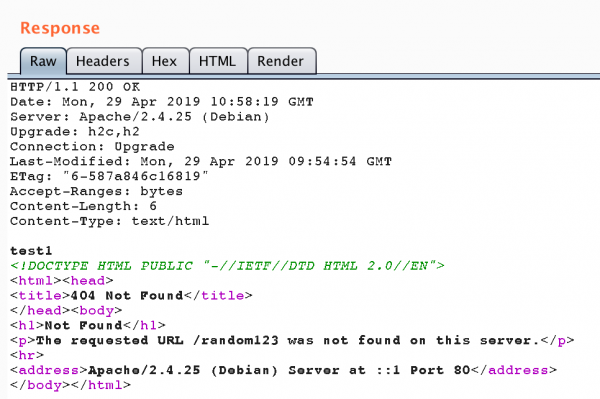

Even more interesting is the example shown in Figure 3. At first glance, it looks like ordinary HTTP/1.1 communication. In fact, it is pipelining combined with an HTTP/0.9 request (GET /random123). This kind of mechanism could potentially be used to bypass filters placed in front of the application (including WAFs, Web Application Firewalls). For example, if a WAF blocks access to the resource random123 by analysing only the request line (GET /test.html HTTP/1.1) and, in addition, does not understand HTTP/0.9, it may be possible to deliver the blocked request successfully in what the WAF treats as the body of the first request.

To improve communication efficiency, instead of the ‘not very successful’ pipelining, the new version of HTTP introduces another mechanism: multiplexing. A single HTTP/2 connection can carry many frames, including headers and bodies (of requests and responses). Frames are organised into so-called streams (each corresponding to a request-response pair). Frames of different streams can be interleaved fairly freely; in particular, to send another request, it is not necessary to wait for the previous response.

To see the details of this type of structure, let’s take a closer look at the communication with the HTTP/1.x and HTTP/2 protocols.

Basics of HTTP/2 communication

Let’s see a simple example of communication with the HTTP server:

```shell
curl http://h2.sekurak.pl/test.html
```

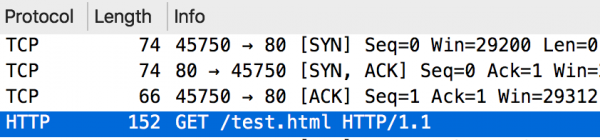

Nothing unusual here. First, the TCP connection is established (3-way handshake), and then the full HTTP request is sent immediately.

The same request in the HTTP/2 protocol will look a bit more complicated:

```shell
curl --http2-prior-knowledge http://h2.sekurak.pl/test.html
```

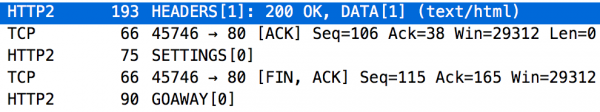

As we can see, communication again starts with establishing the TCP connection (3-way handshake), but we have to wait a moment before the HTTP request is sent (it is the last line in Figure 6). You can also see two streams here (their identifiers are in square brackets directly after the frame type). Stream 0 is used to send control parameters of the entire connection, while the stream with ID 1 was created by the HEADERS frame. The HTTP response is shown in Figure 7. Here we see the HEADERS frame (response headers) and the DATA frame (response body). A little later, a GOAWAY frame announces that the whole TCP connection is being closed.
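What happens before the HEADERS frame can be reproduced by hand. With prior knowledge (as in the curl example), the client opens the connection with the fixed 24-byte connection preface (RFC 7540, section 3.5), immediately followed by a SETTINGS frame on stream 0. Each setting is a 16-bit identifier followed by a 32-bit value. The Python sketch below builds those bytes using the two settings that nghttp sends in the listing in the Tools section. The function names are our own.

```python
import struct

# Fixed client connection preface (RFC 7540, section 3.5).
CLIENT_PREFACE = b"PRI * HTTP/2.0\r\n\r\nSM\r\n\r\n"

SETTINGS_TYPE = 0x4
ACK_FLAG = 0x1
SETTINGS_MAX_CONCURRENT_STREAMS = 0x3
SETTINGS_INITIAL_WINDOW_SIZE = 0x4


def settings_frame(settings: dict[int, int], ack: bool = False) -> bytes:
    """Build a SETTINGS frame; it always travels on stream 0."""
    if ack and settings:
        raise ValueError("a SETTINGS acknowledgement must have an empty payload")
    # Each parameter: 16-bit identifier followed by a 32-bit value.
    payload = b"".join(struct.pack(">HI", ident, value) for ident, value in settings.items())
    flags = ACK_FLAG if ack else 0
    header = len(payload).to_bytes(3, "big") + struct.pack(">BBI", SETTINGS_TYPE, flags, 0)
    return header + payload


def client_connection_start(settings: dict[int, int]) -> bytes:
    """Bytes a prior-knowledge (h2c) client sends before its first HEADERS frame."""
    return CLIENT_PREFACE + settings_frame(settings)


start = client_connection_start({
    SETTINGS_MAX_CONCURRENT_STREAMS: 100,
    SETTINGS_INITIAL_WINDOW_SIZE: 65535,
})
print(len(start))  # 24-byte preface + 9-byte frame header + 12-byte payload = 45
```

The server answers with its own SETTINGS frame and acknowledges ours with an empty SETTINGS frame carrying the ACK flag (the `recv SETTINGS frame <length=0, flags=0x01 ...>` line in the nghttp listing); `settings_frame({}, ack=True)` produces such an acknowledgement.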

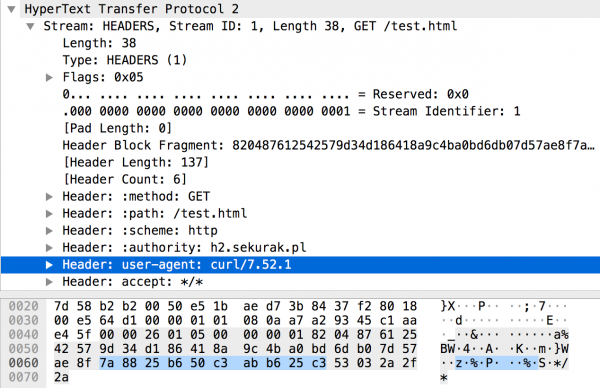

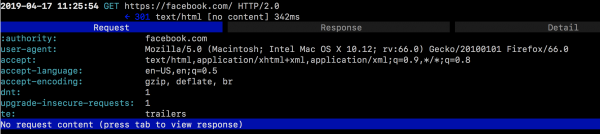

Headers themselves may also seem a bit more complicated in HTTP/2 than in HTTP/1.1. In Figure 8, we can see the user-agent and accept headers (stored in binary form), but there are also so-called pseudo-headers, which start with a colon, e.g. :method.
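The mapping from a classic request onto pseudo-headers is mechanical. The method, path, scheme and authority become :method, :path, :scheme and :authority, which must precede all regular fields. Field names must be lowercase, and connection-specific fields such as Connection or Transfer-Encoding are not allowed (RFC 7540, section 8.1.2). A simplified Python sketch (the function name is our own; corner cases such as CONNECT requests are not handled):

```python
# Connection-specific fields that must not appear in HTTP/2 (RFC 7540, 8.1.2.2).
CONNECTION_SPECIFIC = {"connection", "keep-alive", "proxy-connection", "transfer-encoding", "upgrade"}


def to_http2_headers(method: str, path: str, scheme: str, authority: str,
                     headers: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Turn HTTP/1.1-style request data into an ordered HTTP/2 header list."""
    # Pseudo-headers come first, before any regular header field.
    h2 = [(":method", method), (":path", path), (":scheme", scheme), (":authority", authority)]
    for name, value in headers:
        lname = name.lower()  # HTTP/2 field names must be lowercase
        if lname == "host":
            continue  # :authority already carries this information
        if lname in CONNECTION_SPECIFIC:
            continue
        if lname == "te" and value.strip().lower() != "trailers":
            continue  # TE is only allowed with the value "trailers"
        h2.append((lname, value))
    return h2
```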

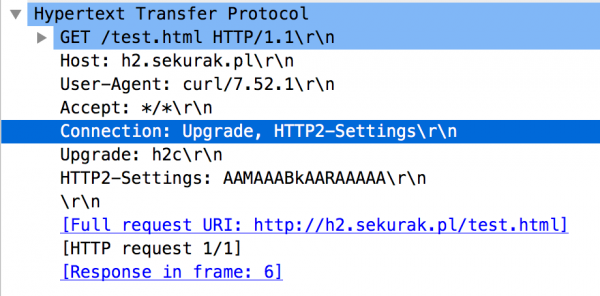

To make things even more complicated, it is also possible to start HTTP/2 communication with a classic HTTP/1.1 request containing several additional headers, including Upgrade. This variant is intended for cases when the client does not know whether the server supports HTTP/2: the client connects using HTTP/1.1 and, if the server agrees, immediately switches to HTTP/2. An example of initiating such communication can be seen in Figure 9.
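The upgrade request is described in section 3.2 of RFC 7540. Besides `Upgrade: h2c`, the client must send an `HTTP2-Settings` header carrying its SETTINGS payload encoded as base64url with the trailing `=` padding removed, and must list both tokens in the `Connection` header. If the server agrees, it answers `101 Switching Protocols`, the client sends the connection preface, and the response to the original request arrives on stream 1. A minimal Python sketch that only builds the request bytes (the function name is our own):

```python
import base64
import struct


def h2c_upgrade_request(host: str, settings: dict[int, int], path: str = "/") -> bytes:
    """Build an HTTP/1.1 request asking the server to switch to cleartext HTTP/2."""
    if not settings:
        # The header value must be non-empty, so this sketch requires a setting.
        raise ValueError("provide at least one SETTINGS parameter")
    payload = b"".join(struct.pack(">HI", ident, value) for ident, value in settings.items())
    # base64url without '=' padding, as required for the HTTP2-Settings value.
    token = base64.urlsafe_b64encode(payload).rstrip(b"=").decode("ascii")
    return (
        f"GET {path} HTTP/1.1\r\n"
        f"Host: {host}\r\n"
        "Connection: Upgrade, HTTP2-Settings\r\n"
        "Upgrade: h2c\r\n"
        f"HTTP2-Settings: {token}\r\n"
        "\r\n"
    ).encode("ascii")
```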

Among the other interesting mechanisms offered by HTTP/2, it is worth mentioning that the server can send additional data to the client on its own initiative (the so-called server push mechanism). For example, along with the content of the test.html file, the server could immediately send us the image files that the page refers to. Finally, a single exchange can easily be cancelled without closing the TCP connection (this is what the RST_STREAM frame is for).
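An RST_STREAM frame is tiny: a 4-byte payload holding an error code, sent on the stream being cancelled (RFC 7540, section 6.4; it must not be sent on stream 0). A minimal Python sketch that builds one, defaulting to the CANCEL error code (0x8) from section 7 of the RFC. The helper name is our own.

```python
import struct

RST_STREAM_TYPE = 0x3
CANCEL = 0x8  # error code from RFC 7540, section 7


def rst_stream_frame(stream_id: int, error_code: int = CANCEL) -> bytes:
    """Build an RST_STREAM frame that aborts one stream but keeps the connection."""
    if not 0 < stream_id < 2**31:
        raise ValueError("RST_STREAM must target a real stream, never stream 0")
    payload = struct.pack(">I", error_code)  # 32-bit error code
    header = len(payload).to_bytes(3, "big") + struct.pack(">BBI", RST_STREAM_TYPE, 0, stream_id)
    return header + payload
```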

Simplifying slightly, HTTP/2 communication often looks like this:

- Setting the connection parameters: in stream number 0,

- Sending an HTTP request: in the HEADERS frame and possibly in the DATA frame (if the request contains a body),

- Sending the HTTP response: in the HEADERS frame and possibly in the DATA frame (if the response includes a body).
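The request step from this list can be sketched in Python. To keep it short, the example encodes every header as an HPACK "literal header field without indexing, new name" without Huffman coding (RFC 7541, section 6.2.2). That is valid but deliberately not compact. The result goes into a single HEADERS frame with END_HEADERS set, plus END_STREAM when the request has no body. The helper names are our own, and strings of 127 bytes or more, CONTINUATION frames and the peer's maximum frame size are not handled.

```python
import struct

HEADERS_TYPE = 0x1
END_STREAM = 0x1
END_HEADERS = 0x4


def hpack_literal(name: str, value: str) -> bytes:
    """Literal header field without indexing, new name, no Huffman (RFC 7541, 6.2.2)."""
    out = bytearray([0x00])  # pattern 0000 with index 0: the name follows as a string
    for text in (name, value):
        raw = text.encode("ascii")
        if len(raw) >= 127:
            raise ValueError("this sketch only supports strings shorter than 127 bytes")
        out.append(len(raw))  # H bit 0 (no Huffman) + 7-bit length
        out += raw
    return bytes(out)


def headers_frame(stream_id: int, headers: list[tuple[str, str]], end_stream: bool = True) -> bytes:
    """Wrap an HPACK header block in one HEADERS frame (no CONTINUATION support)."""
    block = b"".join(hpack_literal(name, value) for name, value in headers)
    flags = END_HEADERS | (END_STREAM if end_stream else 0)
    header = len(block).to_bytes(3, "big") + struct.pack(">BBI", HEADERS_TYPE, flags, stream_id)
    return header + block


frame = headers_frame(1, [
    (":method", "GET"),
    (":path", "/test.html"),
    (":scheme", "http"),
    (":authority", "h2.sekurak.pl"),
])
```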

Security

Mandatory TLS?

To begin with, it is worth emphasising that HTTP/2 is very often used over TLS (this variant is identified as h2; popular browsers such as Chrome and Firefox implement only this variant of HTTP/2). The specification requires at least TLS 1.2.
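On the client side, h2 is chosen during the TLS handshake through ALPN (Application-Layer Protocol Negotiation). A minimal Python sketch using the standard `ssl` module: `set_alpn_protocols`, `minimum_version` and `selected_alpn_protocol` are real `ssl` APIs, while the helper names are our own.

```python
import socket
import ssl
from typing import Optional


def h2_client_context() -> ssl.SSLContext:
    """TLS client context that offers h2 and refuses versions older than TLS 1.2."""
    ctx = ssl.create_default_context()
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # RFC 7540 requires TLS 1.2 or later
    # Protocols offered to the server; the server makes the final choice.
    ctx.set_alpn_protocols(["h2", "http/1.1"])
    return ctx


def negotiated_protocol(host: str, port: int = 443) -> Optional[str]:
    """Connect, complete the handshake and report the protocol chosen via ALPN."""
    ctx = h2_client_context()
    with socket.create_connection((host, port), timeout=5) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            # "h2" if the server agreed to HTTP/2, "http/1.1" or None otherwise.
            return tls.selected_alpn_protocol()
```

For a server that supports HTTP/2 over TLS (nghttp2.org, mentioned later in this text, is one candidate), `negotiated_protocol` would be expected to return `"h2"`.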

As we saw earlier in the curl example, HTTP/2 can also run over plain TCP (i.e. without TLS). This variant is called h2c (HTTP/2 over cleartext). It is friendlier both for testing and for attackers.

The designers of HTTP/2 tried from the outset to avoid certain ‘teething problems’ (which had manifested, among others, in the CRIME vulnerability affecting SPDY, the precursor of HTTP/2; see: https://legacy.gitbook.com/book/bagder/http2-explained/details). That is why headers are compressed with the dedicated HPACK algorithm (https://tools.ietf.org/html/rfc7541).
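Instead of a general-purpose compressor such as DEFLATE (the kind of compression CRIME exploited), HPACK replaces headers with references to a static and a dynamic table and encodes numbers (table indices, string lengths) as prefix integers. The Python sketch below implements that integer representation from section 5.1 of RFC 7541; the function names are our own. The values in the test are the worked examples from Appendix C.1 of the RFC. An indexed header field is simply the index encoded with a 7-bit prefix and the top bit set, so `:method: GET` (static table entry 2) becomes the single byte 0x82.

```python
def encode_integer(value: int, prefix_bits: int, first_byte_flags: int = 0) -> bytes:
    """HPACK integer representation (RFC 7541, section 5.1)."""
    max_prefix = (1 << prefix_bits) - 1
    if value < max_prefix:
        return bytes([first_byte_flags | value])  # fits entirely in the prefix
    out = bytearray([first_byte_flags | max_prefix])
    value -= max_prefix
    while value >= 128:
        out.append((value % 128) | 0x80)  # 7 bits of payload, continuation bit set
        value //= 128
    out.append(value)
    return bytes(out)


def decode_integer(data: bytes, prefix_bits: int) -> tuple[int, int]:
    """Return (value, number of bytes consumed)."""
    max_prefix = (1 << prefix_bits) - 1
    value = data[0] & max_prefix
    if value < max_prefix:
        return value, 1
    shift = 0
    for i, byte in enumerate(data[1:], start=1):
        value += (byte & 0x7F) << shift
        shift += 7
        if not byte & 0x80:  # last byte of the integer
            return value, i + 1
    raise ValueError("truncated HPACK integer")


print(encode_integer(1337, 5).hex())     # 1f9a0a
print(encode_integer(2, 7, 0x80).hex())  # 82 (indexed field ":method: GET")
```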

The complexity of the protocol

As we have seen, HTTP/2 is a rather complicated protocol (the specification alone is nearly 100 pages long: https://tools.ietf.org/html/rfc7540), and remember that it keeps the whole, fairly complex message structure (requests and responses) known from HTTP/1.1 (https://tools.ietf.org/html/rfc7230). On top of that, there are extensions (e.g. Alternative Services: https://tools.ietf.org/html/rfc7838) and curiosities (e.g. Opportunistic Security for HTTP/2: https://tools.ietf.org/html/rfc8164). HPACK itself is specified in a separate RFC document (https://tools.ietf.org/html/rfc7541).

All this means that implementation problems can be expected. At the moment (July 2019), they are usually “only” DoS-class bugs. A few examples:

- Several DoS-class vulnerabilities (in IIS 10, Nginx, Jetty, nghttpd and Wireshark, among others): “HTTP/2: In-depth analysis of the top four flaws of the next generation web protocol”: http://www.imperva.com/docs/Imperva_HII_HTTP2.pdf

In the study, we can find a description of several types of vulnerabilities.

The first one (CVE-2016-0150) is relatively easy to exploit in IIS, with a blue screen of death as the end result (https://portal.msrc.microsoft.com/en-US/security-guidance/advisory/CVE-2016-0150). The attack required sending two HTTP requests in one stream. It is worth recalling the part of section 8.1 of the RFC document (https://tools.ietf.org/html/rfc7540#section-8.1) which states that a new HTTP request must be sent in a completely new stream:

A client sends an HTTP request on a new stream, using a previously unused stream identifier.
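A server can enforce this rule with very little state. The Python sketch below is a simplified, hypothetical check that looks only at HEADERS frames and ignores DATA frames and server push. A second header block on a stream whose request is still open is accepted only as trailers, which must end the stream and must not contain pseudo-headers (RFC 7540, section 8.1.2.1). Anything else must open a new, odd-numbered stream with a higher identifier than any seen before (section 5.1.1). A second request in the same stream is therefore rejected instead of being processed.

```python
class ProtocolError(Exception):
    """Raised where a real server would reset the stream or the connection."""


class ClientStreamTracker:
    """Simplified per-connection bookkeeping of client-initiated request streams."""

    def __init__(self) -> None:
        self.highest_seen = 0
        self.open_requests: set[int] = set()  # streams whose request has not ended yet

    def on_headers(self, stream_id: int, headers: list[tuple[str, str]], end_stream: bool) -> str:
        if stream_id in self.open_requests:
            # The only legitimate second header block is a trailer section.
            if not end_stream or any(name.startswith(":") for name, _ in headers):
                raise ProtocolError("second request on an open stream")
            self.open_requests.discard(stream_id)
            return "trailers"
        if stream_id == 0 or stream_id % 2 == 0:
            raise ProtocolError("client requests need odd, non-zero stream identifiers")
        if stream_id <= self.highest_seen:
            raise ProtocolError("stream identifier already used; a new request needs a new stream")
        self.highest_seen = stream_id
        if not end_stream:
            self.open_requests.add(stream_id)
        return "new request"
```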

Another issue discussed in the Imperva study is the so-called slow read. This DoS attack relies on deliberately slow HTTP/2 communication (e.g. 1 byte per frame), which can force the HTTP server to tie up significant resources, e.g. when it allocates one thread to each stream. As a result, a single, barely active TCP connection may be enough to block access to the server for other clients.
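HTTP/2 flow control is what makes a slow read convenient: the server may send DATA only while the receiver's flow-control window is open, and the receiver decides when, and by how much, to reopen it with WINDOW_UPDATE frames. The rough Python model below is our own simplification (it tracks only the stream-level window and ignores the connection-level window and timing). It counts how many DATA frames a response needs when the client grants credit in given increments. With one-byte increments, every byte of the response costs a separate DATA frame, while the server keeps the stream's resources allocated the whole time.

```python
def data_frames_needed(body_size: int, initial_window: int, update_increment: int,
                       max_frame_size: int = 16384) -> int:
    """Count DATA frames needed to deliver body_size bytes under stream flow control.

    Simplified model: the client sends a WINDOW_UPDATE of update_increment bytes
    only after the window has been fully used up.
    """
    if initial_window < 0 or update_increment <= 0:
        raise ValueError("the window must be non-negative and updates must grant credit")
    frames = 0
    window = initial_window
    remaining = body_size
    while remaining > 0:
        if window == 0:
            window = update_increment  # the client reluctantly reopens the window
        chunk = min(remaining, window, max_frame_size)
        remaining -= chunk
        window -= chunk
        frames += 1
    return frames


print(data_frames_needed(100_000, 65535, 65535))  # 7 with the default window and frame size
print(data_frames_needed(100_000, 1, 1))  # 100000 when the client allows 1 byte at a time
```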

Yet another example is a variant of the decompression bomb aimed at the HPACK algorithm. Here, the attacker prepares a sufficiently large HTTP request (for example, one with a very long header) that compresses very effectively. The compressed HTTP/2 traffic is therefore small, but once the server decompresses it, it fills the server's memory. An interesting variant of this vulnerability is a DoS against a client application (see the DoS in Wireshark: https://www.wireshark.org/security/wnpa-sec-2016-05.html).
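HPACK makes such bombs cheap: once a large header is stored in the dynamic table, each further reference to it costs a single byte on the wire (for example 0xBE, the indexed representation of index 62, the first dynamic-table entry). A common defence is to cap the decoded size of the header list, which is what the SETTINGS_MAX_HEADER_LIST_SIZE setting advertises. RFC 7540 (section 6.5.2) counts each field as the length of its name plus the length of its value plus 32 bytes of overhead. A minimal Python sketch of a decoder-side limit (class and function names are hypothetical), meant to be called for each field as soon as it is decoded so that decoding can stop early:

```python
class HeaderListTooLarge(Exception):
    """Raised when decoded headers exceed the configured limit."""


def field_size(name: str, value: str) -> int:
    # Size as defined for SETTINGS_MAX_HEADER_LIST_SIZE: name + value + 32 octets.
    return len(name.encode()) + len(value.encode()) + 32


class HeaderListLimit:
    """Tracks the uncompressed size of one header block while it is being decoded."""

    def __init__(self, limit: int) -> None:
        self.limit = limit
        self.size = 0

    def add(self, name: str, value: str) -> None:
        self.size += field_size(name, value)
        if self.size > self.limit:
            # Stop before the decoded list grows any further.
            raise HeaderListTooLarge(f"{self.size} bytes exceeds limit of {self.limit}")


# Hypothetical attack: one 4,000-byte dynamic-table entry referenced 1,000 times.
# On the wire that is roughly 5 KB (the entry plus 1,000 one-byte references);
# fully decoded it would be about 4 MB.
limit = HeaderListLimit(64 * 1024)
try:
    for _ in range(1000):
        limit.add("x-big", "A" * 4000)
except HeaderListTooLarge as exc:
    print("rejected:", exc)
```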

- DoS in Windows Server / Windows 10: https://portal.msrc.microsoft.com/en-us/security-guidance/advisory/ADV190005

In this case, it was possible to send an excessive number of specially crafted SETTINGS frames, which Microsoft describes as follows:

In some situations, excessive settings can cause services to become unstable and may result in a temporary CPU usage spike until the connection timeout is reached and the connection is closed.

Interestingly, a very similar bug was also fixed in Tomcat (http://tomcat.apache.org/security-8.html#Fixed_in_Apache_Tomcat_8.5.38).

- DoS in Nginx: http://mailman.nginx.org/pipermail/nginx-announce/2018/000220.html

- DoS in Node.js: https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2018-7161

- DoS in F5 BIG-IP: https://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2018-5514

- DoS in HAProxy: https://www.mail-archive.com/haproxy@formilux.org/msg31253.html

Sometimes there is even potential for code execution on the server (see the vulnerability in Apache Traffic Server: https://yahoo-security.tumblr.com/post/122883273670/apache-traffic-server-http2-fuzzing).

Known vulnerabilities once again

It is also worth remembering somewhat unexpected vulnerabilities against which HTTP/1.1 handling has long been protected. An example of this type of problem is CVE-2017-7675 in Tomcat (see: http://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2017-7675):

The HTTP/2 implementation bypassed a number of security checks that prevented directory traversal attacks. It was, therefore, possible to bypass security constraints using a specially crafted URL.

So, if I talk to the server over HTTP/1.1, everything is fine, but if essentially the same request is sent over HTTP/2, I may be able to exploit path traversal.

WAF/IDS

Remember that in real-world deployments we will most often deal with encrypted HTTP/2 (h2). For WAF (Web Application Firewall) systems, this is the first obstacle. Moreover, even after decryption, HTTP/2 has to be analysed completely differently from HTTP/1.1. We may have a WAF but not have enabled its HTTP/2 support; then there is a chance that an attacker can bypass it entirely, e.g. by placing attacks in HTTP/2 frames.

A common solution is to terminate the HTTP/2 connection on a WAF-class system that also acts as a reverse HTTP proxy. The proxy terminates the encrypted HTTP/2 connection, translates the traffic to HTTP/1.1, analyses it for threats and forwards it to the target servers. Of course, if we already use HTTP/2, it would be best to use it everywhere (including between the WAF and the target HTTP servers), but for now (April 2019) such solutions are only beginning to appear on the market.

What’s next?

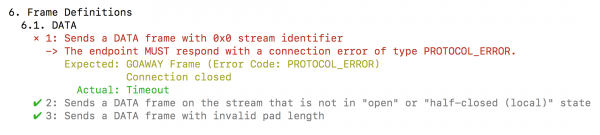

The authors of the HTTP/2 standard devoted an entire section to possible security issues (see the “Security Considerations” chapters: https://tools.ietf.org/html/rfc7540#page-69, https://tools.ietf.org/html/rfc7541#page-19). Do implementers strictly follow the recommendations of this section? That varies, especially since it is quite difficult to find a server that is 100% compliant with the HTTP/2 RFCs. An example of this kind of non-compliance can be seen in Figure 11.

Readers interested in low-level HTTP/2 security testing can find inspiration in the work “Attacking HTTP/2 Implementations” (https://yahoo-security.tumblr.com/post/134549767190/attacking-http2-implementations), whose authors released an HTTP/2 fuzzer (https://github.com/c0nrad/http2fuzz). For those who prefer Python, there is also httpooh (https://github.com/artem-smotrakov/httpooh). In this context, it is also worth mentioning the honggfuzz tool developed by Robert Święcki (https://github.com/google/honggfuzz).

Tools

At present (April 2019), there are few tools that support HTTP/2 and are useful to people working in IT security. One of them is the previously mentioned curl.

We can also recommend the HTTP/2 client included in the nghttp2 package (https://github.com/nghttp2/nghttp2). It allows a fairly detailed analysis of communication, including the h2 protocol (over TLS). Several minor parts have been removed from the listing:

```shell
$ nghttp -vn http://h2.sekurak.pl/test.html
[ 0.302] Connected
[ 0.302] send SETTINGS frame <length=12, flags=0x00, stream_id=0>
          (niv=2)
          [SETTINGS_MAX_CONCURRENT_STREAMS(0x03):100]
          [SETTINGS_INITIAL_WINDOW_SIZE(0x04):65535]
[ 0.303] send HEADERS frame <length=46, flags=0x25, stream_id=13>
          ; END_STREAM | END_HEADERS | PRIORITY
          (padlen=0, dep_stream_id=11, weight=16, exclusive=0)
          ; Open new stream
          :method: GET
          :path: /test.html
          :scheme: http
          :authority: h2.sekurak.pl
          accept: */*
          accept-encoding: gzip, deflate
          user-agent: nghttp2/1.18.1
[ 0.435] recv SETTINGS frame <length=6, flags=0x00, stream_id=0>
          (niv=1)
          [SETTINGS_MAX_CONCURRENT_STREAMS(0x03):100]
[ 0.435] recv SETTINGS frame <length=0, flags=0x01, stream_id=0>
          ; ACK
          (niv=0)
[ 0.435] recv WINDOW_UPDATE frame <length=4, flags=0x00, stream_id=0>
          (window_size_increment=2147418112)
[ 0.435] recv (stream_id=13) :status: 200
[ 0.435] recv (stream_id=13) date: Mon, 15 Apr 2019 19:47:53 GMT
[ 0.435] recv (stream_id=13) server: Apache/2.4.25 (Debian)
[ 0.435] recv (stream_id=13) last-modified: Mon, 15 Apr 2019 14:28:10 GMT
[ 0.435] recv (stream_id=13) etag: "5-58692763f99d8"
[ 0.435] recv (stream_id=13) accept-ranges: bytes
[ 0.435] recv (stream_id=13) content-length: 5
[ 0.435] recv (stream_id=13) content-type: text/html
[ 0.436] recv HEADERS frame <length=104, flags=0x04, stream_id=13>
          ; END_HEADERS
          (padlen=0)
          ; First response header
[ 0.436] recv DATA frame <length=5, flags=0x01, stream_id=13>
          ; END_STREAM
[ 0.436] send GOAWAY frame <length=8, flags=0x00, stream_id=0>
          (last_stream_id=0, error_code=NO_ERROR(0x00), opaque_data(0)=[])
```
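The `flags=0x25` value in the send HEADERS line is a bit mask, which nghttp also spells out as END_STREAM | END_HEADERS | PRIORITY. A small Python helper of our own, covering the flag bits that RFC 7540 defines per frame type in section 6, decodes such values when reading raw traces:

```python
# Flag bits defined per frame type in RFC 7540, section 6.
FLAG_NAMES = {
    "DATA": {0x1: "END_STREAM", 0x8: "PADDED"},
    "HEADERS": {0x1: "END_STREAM", 0x4: "END_HEADERS", 0x8: "PADDED", 0x20: "PRIORITY"},
    "SETTINGS": {0x1: "ACK"},
    "PING": {0x1: "ACK"},
    "PUSH_PROMISE": {0x4: "END_HEADERS", 0x8: "PADDED"},
    "CONTINUATION": {0x4: "END_HEADERS"},
}


def describe_flags(frame_type: str, flags: int) -> list[str]:
    """Return the names of the flag bits set in flags, lowest bit first."""
    names = FLAG_NAMES.get(frame_type, {})  # other frame types define no flags
    return [name for bit, name in sorted(names.items()) if flags & bit]


print(describe_flags("HEADERS", 0x25))  # ['END_STREAM', 'END_HEADERS', 'PRIORITY']
```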

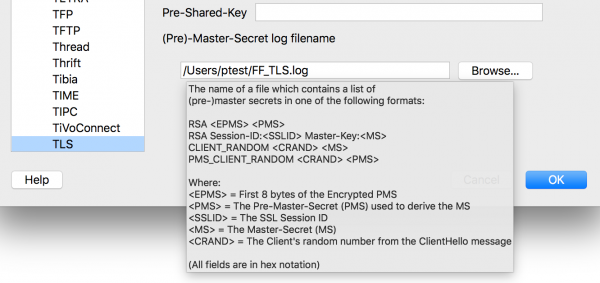

An even more detailed analysis (down to the level of frame contents) is possible in Wireshark. The question remains, however: how do we decrypt TLS? Wireshark can be given the cryptographic key material needed for that (see Figure 12).

How do we create the file indicated in Figure 12? Just before launching the browser (Firefox or Chrome), set the appropriate environment variable:

```shell
$ export SSLKEYLOGFILE=~/FF_TLS.log
$ ./firefox
```
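Browsers are not the only programs that can produce such a file. For scripted tests, Python's `ssl` module (version 3.8 or newer) offers the `SSLContext.keylog_filename` attribute, which writes TLS secrets in the same NSS key log format that Wireshark reads. A minimal sketch; the function name and the file path used with it are our own examples:

```python
import ssl


def context_with_keylog(path: str) -> ssl.SSLContext:
    """Client TLS context that offers h2 and logs session secrets to path."""
    ctx = ssl.create_default_context()
    ctx.set_alpn_protocols(["h2", "http/1.1"])
    # Key material for connections made with this context is appended to path
    # (Python 3.8+), in the key log format Wireshark accepts.
    ctx.keylog_filename = path
    return ctx
```

Connections made with this context (e.g. via `ctx.wrap_socket(...)`) can then be decrypted in Wireshark by pointing it at the same file. As with SSLKEYLOGFILE, the file contains secrets, so it belongs only on test machines.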

An example analysis of a connection to http://nghttp2.org/ is shown in Figure 13.

Figure 13. Wireshark – HTTP2 communication analysis.

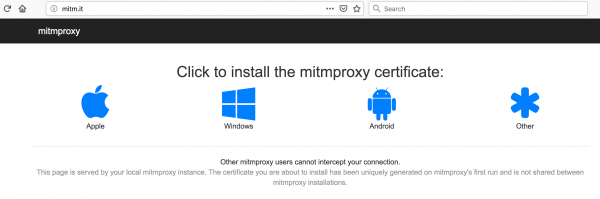

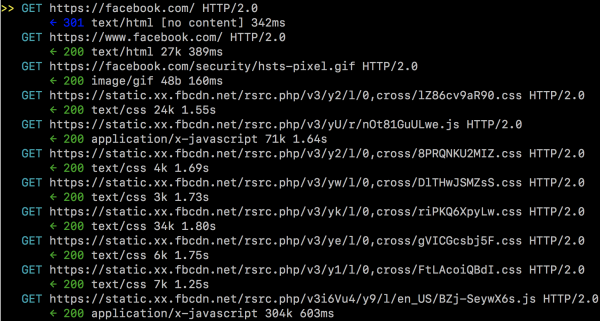

To capture or modify live HTTP/2 traffic from the browser, you can use the console tool mitmproxy (https://mitmproxy.org/). Mitmproxy can work in several modes, including as a standard HTTP proxy.

This is how the tool is launched:

```shell
$ mitmproxy --host
```

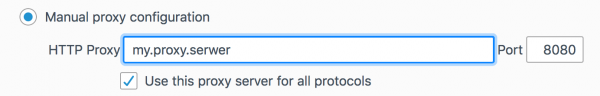

Next, we point the browser's proxy settings at the IP address of the machine on which mitmproxy runs (it listens on port 8080 by default):

We then install the CA root certificate offered by mitmproxy (do this carefully, preferably only in a browser used for testing). This lets us intercept h2 traffic easily.

After visiting a chosen site (one that supports HTTP/2), we can already see individual requests with their details (see Figures 16 and 17).

Summary

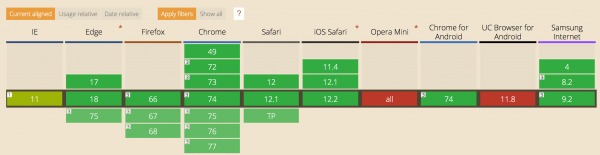

Was the HTTP/2 project successful? Probably yes. At present (July 2019), the overwhelming majority of web browsers support the protocol:

Moreover, about 35% of all websites also support HTTP/2 (see https://w3techs.com/technologies/details/ce-http2/all/all; note that this means most websites still use only the previous HTTP versions).

As we have seen, from the security perspective the whole thing looks solid, but let’s not forget about backward compatibility: vulnerabilities exploitable over HTTP/1.x are highly likely to be exploitable over HTTP/2 as well. This applies in particular to application vulnerabilities. Exploiting SQL injection or XXE looks the same in both versions of the protocol; only the low-level way of delivering the attack changes. Cynics would say, “The attacks can just be carried out a bit faster”. After all, speed was the main goal of HTTP/2.

Does this mean that the coming years will be dominated by HTTP/2? Some point out that the performance benefits of the protocol are slight under certain conditions. HTTP/1.x can also perform better on networks with packet loss (see the works “HTTP3 explained”: https://daniel.haxx.se/http3-explained/ and “HTTP/2: What no one’s telling you”: https://www.slideshare.net/Fastly/http2-what-no-one-is-telling-you).

The intended solution to this problem is the new HTTP/3 protocol, which is currently being standardised (see: https://quicwg.org/base-drafts/draft-ietf-quic-http.html). Its transport layer is based on UDP (via QUIC), and it draws heavily on the HTTP/2 specification. If everything goes as planned, it will be a truly fast and secure successor to HTTP/1.x.